ChatGPT: Revolution or threat?

ChatGPT: between technological revolution and confidentiality dilemma. Discover the potential and the pitfalls.

Since OpenAI's global deployment of ChatGPT, opinions have diverged. Some believe it will revolutionize the way we live and work, while others are concerned about its potential for disruption, particularly with regard to the privacy of individuals and organizations. Incidents have already occurred: sensitive information has been exposed, and employees have faced consequences after entering confidential company data into the chatbot. An example:

Samsung has taken action following revelations that three of its employees leaked sensitive data using ChatGPT. Workers were allowed to use the chatbot to experiment with fixing problems in the company's source code. SOURCE: TECHMONITOR.AI

Some countries have even imposed a temporary ban on its use in an effort to protect data.

So let's ask ourselves the following questions:

What exactly does ChatGPT do with our information and how can we use it securely?

What are the benefits in terms of cybersecurity?

What are the risks?

The following article will try to answer as many important questions around ChatGPT as possible.

ChatGPT?

Before we get into the risks, Q&A, benefits and everything else, do you know what ChatGPT is?

If so, read on anyway.

If not, please read the rest carefully.

And what better way to define ChatGPT than ChatGPT itself!

ChatGPT:

ChatGPT is an artificial intelligence language model developed by OpenAI. Based on the GPT (Generative Pretrained Transformer) architecture, it is capable of autonomously generating text, answering questions, writing essays, creating and debugging code, solving complex mathematical equations and even translating between different languages.

It was "trained" on a large amount of data from the Internet, including books, Wikipedia, articles and other online content. This gives it the ability to understand and generate text conversationally, although it does not understand the meaning of the text in the same way a human would.

It's important to note, although ChatGPT is capable of producing impressive responses, that its responses are based on patterns in the data it was trained on, not an actual understanding of the world.

SOURCE: CHATGPT

In other words, as a natural language processing tool, ChatGPT works on probability: it generates answers by predicting the next word in a sentence, based on the millions of examples it was trained on.
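To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented (GPT models use a neural network over tokens, not word-pair counts); it only illustrates the idea of choosing the most probable continuation from training examples. The corpus and the function names are invented for this illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers

def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def predict_next(model, word):
    """Return the most probable next word, or None for an unseen word."""
    probs = next_word_probabilities(model, word)
    return max(probs, key=probs.get) if probs else None

# Toy training data (hypothetical sentences, for illustration only).
corpus = [
    "the attacker sent a phishing email",
    "the attacker sent a malicious link",
    "the analyst sent a report",
]
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # "attacker" follows "the" in 2 of 3 examples
```

The model has no idea what an attacker is; it only knows that "attacker" followed "the" more often than "analyst" did, which is the sense in which such answers rest on patterns rather than understanding.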

ChatGPT and cybersecurity

Is our data safe?

ChatGPT stores user requests/prompts and responses to continually refine its algorithms.

Even after your interactions are deleted, the bot retains the ability to use this data to improve its AI capabilities. This situation could potentially be risky if users enter sensitive personal or organizational data that could be attractive to malicious entities in the event of a data breach.

It also archives other personal details while in operation, such as the user's approximate geographic location, IP address, payment information, and device specifications (although this type of data collection is common to most websites for analytical purposes and is therefore not exclusive to ChatGPT). The data collection techniques used by OpenAI have raised concerns among some researchers, given that the data collected could include copyrighted material.

Malicious users

It appears that ChatGPT is increasingly being exploited for malicious purposes. Its code-writing skills can be used to create malware, build dark web sites, and carry out cyberattacks.

Users have reported several cases of malicious code writing, phishing emails and other cyberattack techniques, including the orchestration of very sophisticated phishing attacks. By improving the language used, ChatGPT removes the telltale signs of poor spelling and grammar often associated with phishing attempts.

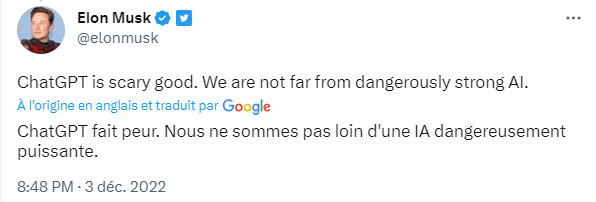

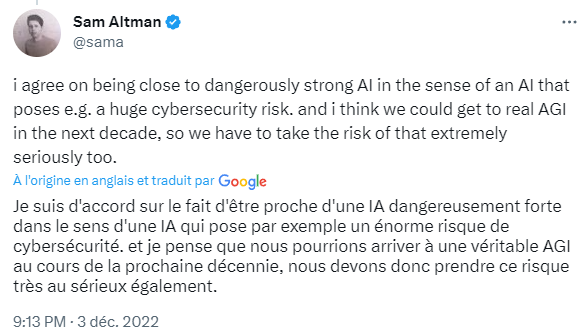

Additionally, it has been used to better understand the psychology of targeted recipients, with the aim of inducing stress and thus increasing the effectiveness of phishing attacks.

In March 2023, an open letter signed by more than a thousand people, including OpenAI co-founder Elon Musk, called for a pause of at least six months in the training of the most powerful AI systems. This pause was meant to allow researchers to understand the risks associated with these tools and develop mitigation strategies.

Already known flaws?

What data breaches have occurred so far? OpenAI confirmed that a bug in an open-source library used by the chatbot caused a data leak in March 2023, allowing some users to see parts of another active user's chat history. Payment information for 1.2% of the ChatGPT Plus subscribers who were active during a given period may also have been exposed.

OpenAI released a statement saying that the number of users whose data was exposed is "extremely small" because they would have had to open a subscription email or click on certain functions in a specific sequence during a given period to be affected. However, ChatGPT was taken offline for several hours while the bug was fixed.

Prior to this, Samsung experienced three separate incidents in which confidential company information was fed into the chatbot (Samsung source code, the transcript of a company meeting, and a test sequence to identify defective chips), which gave rise to disciplinary investigations.

The data has not been leaked as far as we know, but as mentioned above, what is entered into ChatGPT can be stored and used to train its models, so the confidential information entered by Samsung staff members could, in theory, resurface in answers given to other users of the platform.

What if we asked ChatGPT directly?

OpenAI says it conducts annual testing to identify security weaknesses and prevent them from being exploited by malicious actors. It also runs a bug bounty program, inviting researchers and ethical hackers to test the system for vulnerabilities in exchange for a cash reward.

Protection

As with all digital applications, ChatGPT states:

"If you have any concerns about the privacy or security of your interactions with ChatGPT, you are advised to avoid sharing any personally identifiable or sensitive information. Although OpenAI aims to provide a secure environment, it is essential to exercise caution when interacting with AI systems or any online platforms."

Another general tip is to create a strong, unique password and close the app after using it, especially on shared devices. You can also opt out of having ChatGPT store and use your data by filling out an online form:

Form: User Content Opt Out Request

In April 2023, OpenAI introduced a new feature allowing users to turn off chat history. Conversations started after enabling this feature are not stored to train the algorithm and do not appear in the history sidebar, but are retained for 30 days before being permanently deleted.
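The advice to avoid sharing sensitive information can be partly automated by scrubbing text before it is pasted into a chatbot. The sketch below is a minimal illustration under stated assumptions: the three patterns and the `redact` function are hypothetical, cover only a few obvious cases, and are no substitute for a vetted data loss prevention tool.

```python
import re

# Hypothetical patterns, for illustration only. The API key format in
# particular is an assumption, not the format of any specific vendor.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "API_KEY": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}

def redact(text):
    """Replace anything matching a known pattern with a [LABEL] placeholder.

    Returns the scrubbed text and the list of labels that were found,
    so the caller can warn the user or block the request entirely.
    """
    findings = []
    for label, pattern in PATTERNS.items():
        def _replace(match, label=label):
            findings.append(label)
            return f"[{label}]"
        text = pattern.sub(_replace, text)
    return text, findings

prompt = "Why does login fail for alice@example.com from 10.0.0.12?"
safe_prompt, found = redact(prompt)
print(safe_prompt)  # Why does login fail for [EMAIL] from [IPV4]?
print(found)        # ['EMAIL', 'IPV4']
```

Pattern matching like this will miss source code, meeting transcripts and other free-form confidential material, which is exactly what was involved in the Samsung incidents, so it complements rather than replaces a clear usage policy.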

Benefits

SOURCE: TECHTARGET

The images below were generated by Midjourney:

Midjourney is an independent research laboratory that produces an artificial intelligence program of the same name, which creates images from textual descriptions in a way similar to OpenAI's DALL-E.

Cyber Defense Automation

ChatGPT could help analysts in overworked security operations centers (SOCs) by automatically analyzing cybersecurity incidents and making strategic recommendations to help inform immediate and long-term defense measures.

For example, rather than analyzing the risk of a given PowerShell script from scratch, a SOC analyst could rely on ChatGPT's assessment and recommendations. SecOps teams can also ask ChatGPT more general questions, such as how to prevent dangerous PowerShell scripts from running or loading files from untrusted sources, to improve their organization's overall security measures.

Adversary simulation

ChatGPT's safeguards mean it won't respond to requests it considers suspicious, but users continue to discover ways around them. For example, if ChatGPT is asked to write ransomware code, it will refuse to do so. But many cybersecurity researchers have indicated that by describing relevant tactics, techniques and procedures, without using trigger words such as malware or ransomware, they can trick the chatbot into producing malicious code.

ChatGPT's creators will likely attempt to close these loopholes as they appear, but it seems plausible that attackers will continue to find workarounds. The good news is that penetration testers can also use these flaws to simulate realistic adversary behavior across various attack vectors in an effort to improve defensive controls.

Cybersecurity Reports

Detailed cybersecurity incident reporting plays a vital role in helping key stakeholders (SecOps teams, security managers, business executives, auditors, board members and business units) understand and improve the security posture of an organization.

However, producing incident reports is a long and tedious job. Cybersecurity practitioners could use ChatGPT to write reports by providing the application with details such as the following:

The target of the compromise or attack.

Scripts or shells used by attackers.

Relevant data from the IT environment.

By offloading some cybersecurity reporting tasks to ChatGPT, incident responders could spend more time on other critical activities.

In this way, generative AI could help alleviate issues of burnout and understaffing in the cybersecurity field.
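As a rough sketch of what "providing the application with details" could look like, the snippet below assembles the three kinds of detail listed above into a single prompt. The `IncidentDetails` class, the `build_report_prompt` function and the sample values are hypothetical names chosen for this illustration; the resulting text would still have to be sent to a chatbot, stripped of anything confidential first, and the draft reviewed by a human.

```python
from dataclasses import dataclass, field

@dataclass
class IncidentDetails:
    """The inputs a responder would gather before asking for a draft."""
    target: str
    attacker_scripts: list = field(default_factory=list)
    environment_notes: list = field(default_factory=list)

def build_report_prompt(incident):
    """Assemble the incident details into one prompt string."""
    lines = [
        "Draft a cybersecurity incident report from the details below.",
        f"Target of the compromise or attack: {incident.target}",
        "Scripts or shells used by attackers:",
    ]
    # Fall back to an explicit marker so empty sections are never silent.
    lines += [f"- {s}" for s in incident.attacker_scripts] or ["- (none recorded)"]
    lines.append("Relevant data from the IT environment:")
    lines += [f"- {n}" for n in incident.environment_notes] or ["- (none recorded)"]
    return "\n".join(lines)

# Sample values are invented for the example.
incident = IncidentDetails(
    target="internal file server",
    attacker_scripts=["obfuscated PowerShell downloader"],
    environment_notes=["endpoint agent was out of date"],
)
print(build_report_prompt(incident))
```

Keeping the inputs in a small structure like this also makes it easy to check what is about to leave the organization before anything is submitted.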

Threat Intelligence

Threat researchers today have access to an unprecedented amount of cybersecurity intelligence from sources such as their enterprise infrastructure, external threat intelligence feeds, breach reports, publicly available data, the dark web and social media. Although knowledge is power, humans cannot coherently analyze and synthesize such a volume of information in a meaningful way.

Generative AI, on the other hand, could soon do the following almost instantly:

Consume vast volumes of threat intelligence data from various sources.

Identify patterns in data.

Create a list of new adversary tactics, techniques and procedures.

Recommend relevant cyber defense strategies.

With ChatGPT, cybersecurity teams could potentially gain a complete, accurate and up-to-date understanding of the threat landscape almost instantly, and adjust their security controls accordingly.
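To give a sense of what "identifying patterns" across sources means at its simplest, the sketch below counts how many independent sources mention each technique. This is plain counting, not generative AI, and it is shown only as a stand-in for the kind of synthesis described above; the report structure, source names and technique labels are all assumptions made for the example.

```python
from collections import defaultdict

def rank_techniques(reports):
    """Rank techniques by how many distinct sources report them."""
    sources_by_technique = defaultdict(set)
    for report in reports:
        for technique in report["techniques"]:
            sources_by_technique[technique].add(report["source"])
    # Most widely corroborated first; ties broken alphabetically.
    return sorted(
        ((technique, len(sources)) for technique, sources in sources_by_technique.items()),
        key=lambda item: (-item[1], item[0]),
    )

# Hypothetical, hand-written intelligence records.
reports = [
    {"source": "internal-logs", "techniques": ["phishing", "powershell"]},
    {"source": "external-feed", "techniques": ["phishing"]},
    {"source": "breach-report", "techniques": ["phishing", "ransomware", "powershell"]},
]
for technique, count in rank_techniques(reports):
    print(f"{technique}: seen in {count} source(s)")
```

A technique corroborated by several unrelated sources is a reasonable first candidate for review, which is the kind of prioritization a language model would be expected to produce from far messier, unstructured input.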

Your opinion?

I am interested in your opinion:

What do you think of ChatGPT or any other AI?

Do you have any fears or, on the contrary, are you very enthusiastic?

Do you see limits, potential or just a trend?

React or leave me your question via the form 😄
